LangGraph is a framework that extends LangChain with a graph-based orchestration model for AI workflows. While LangChain popularized modular pipelines that chain together LLM prompts, memory, tools, and agents, LangGraph takes a structured, declarative approach to designing complex, multi-step, and branching workflows, which makes it well suited to building robust, production-ready agentic AI systems.

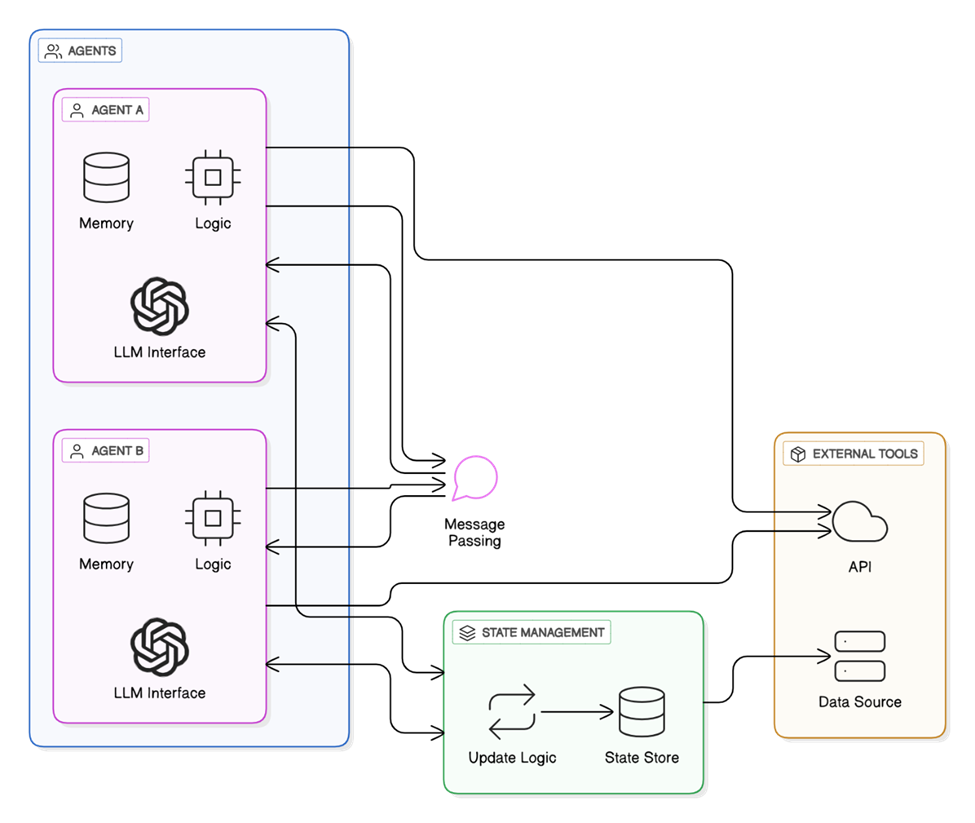

At its core, LangGraph enables developers to define state machines or directed graphs, where:

- Nodes represent actions (e.g., invoking an LLM, calling an API, querying a database),

- Edges define transitions between states based on context, conditions, or outputs.
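To make the node and edge idea concrete, here is a minimal plain-Python sketch. It does not use the LangGraph API: the `NODES` and `EDGES` tables, the `END` sentinel, and the `run` helper are all hypothetical names chosen for illustration, and the keyword check in `classify` stands in for an LLM call.

```python
from typing import Callable, Dict

State = Dict[str, str]
Node = Callable[[State], State]
Router = Callable[[State], str]

END = "__end__"  # hypothetical sentinel that marks the end of the graph


def classify(state: State) -> State:
    """Node: tag the request with an intent (a stand-in for an LLM call)."""
    intent = "billing" if "invoice" in state["text"].lower() else "general"
    return {**state, "intent": intent}


def billing(state: State) -> State:
    """Node: handle billing questions."""
    return {**state, "reply": "Routing you to billing support."}


def general(state: State) -> State:
    """Node: handle everything else."""
    return {**state, "reply": "Here is a general answer."}


NODES: Dict[str, Node] = {"classify": classify, "billing": billing, "general": general}

# Each edge maps the current state to the name of the next node.
EDGES: Dict[str, Router] = {
    "classify": lambda s: s["intent"],  # conditional edge: depends on the node's output
    "billing": lambda s: END,           # fixed edge
    "general": lambda s: END,           # fixed edge
}


def run(start: str, state: State) -> State:
    """Walk the graph from `start` until an edge returns END."""
    current = start
    while current != END:
        state = NODES[current](state)
        current = EDGES[current](state)
    return state


result = run("classify", {"text": "Where is my invoice?"})
print(result["reply"])
```

Keeping the transitions in a table separate from the node functions is what lets the flow be inspected or changed without touching the actions themselves.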

This graph structure makes it easy to:

- Model workflows that require conditional logic and looping (e.g., retry mechanisms, approval processes),

- Handle dynamic task routing based on real-time inputs or intermediate results,

- Visualize and reason about the flow of complex, multi-agent systems.
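The retry case mentioned above can be sketched as a node whose outgoing edge either loops back to the same node, moves on, or takes a fallback path. This is a library-free illustration under stated assumptions: `make_flaky_call`, `route_after_fetch`, the `"cached-default"` value, and the retry budget of three are hypothetical.

```python
from typing import Any, Callable, Dict, Optional

State = Dict[str, Any]


def make_flaky_call(failures: int) -> Callable[[], Optional[str]]:
    """Hypothetical service stub: returns None `failures` times, then a payload."""
    remaining = {"n": failures}

    def call() -> Optional[str]:
        if remaining["n"] > 0:
            remaining["n"] -= 1
            return None
        return "payload"

    return call


def route_after_fetch(state: State, max_attempts: int) -> str:
    """Conditional edge: finish, loop back to 'fetch', or take the fallback."""
    if state["data"] is not None:
        return "end"
    if state["attempts"] < max_attempts:
        return "fetch"
    return "fallback"


def run(call: Callable[[], Optional[str]], max_attempts: int = 3) -> State:
    """Execute the two-node graph: 'fetch' (with a self-loop) and 'fallback'."""
    state: State = {"attempts": 0, "data": None}
    current = "fetch"
    while current != "end":
        if current == "fetch":
            state = {**state, "attempts": state["attempts"] + 1, "data": call()}
            current = route_after_fetch(state, max_attempts)
        else:  # "fallback": give up on the service and use a default value
            state = {**state, "data": "cached-default"}
            current = "end"
    return state


print(run(make_flaky_call(2)))  # succeeds on the third attempt
print(run(make_flaky_call(5)))  # exhausts the budget and falls back
```

The attempt counter lives in the state rather than in a local loop variable, so the routing decision can be made from the state alone.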

Key Concepts of LangGraph:

- Nodes: Encapsulate operations such as prompting an LLM, fetching external data, calling a plugin or service, etc.

- Edges: Define transitions between nodes. A conditional edge picks the next node based on a node's output or the current state.

- Graph memory/state: Maintains contextual information as an agent traverses the graph, enabling stateful orchestration and adaptive decision-making.

- Execution Engine: Runs the graph from its entry point, following fixed edges deterministically and resolving conditional edges at run time based on the current state.
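One way to picture the shared state is as a dictionary that every node reads, with each node returning only the keys it wants to change. The sketch below is plain Python rather than LangGraph's implementation. The `REDUCERS` table and `apply_update` helper are hypothetical names; the assumption is that some keys (here `messages`) accumulate while others are overwritten.

```python
import operator
from typing import Any, Callable, Dict

State = Dict[str, Any]

# Per-key merge rules; keys without a rule are simply overwritten.
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "messages": operator.add,  # concatenate new messages onto the history
}


def apply_update(state: State, update: State) -> State:
    """Merge a node's partial update into the shared state without mutating it."""
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            merged[key] = REDUCERS[key](merged[key], value)
        else:
            merged[key] = value
    return merged


def greet(state: State) -> State:
    """Node: add a greeting and record which step ran."""
    return {"messages": ["Hello!"], "step": "greet"}


def answer(state: State) -> State:
    """Node: respond using context left in the state by earlier steps."""
    return {"messages": [f"You said: {state['messages'][0]}"], "step": "answer"}


state: State = {"messages": ["Hi"], "step": "start"}
for node in (greet, answer):
    state = apply_update(state, node(state))

print(state["messages"])
print(state["step"])
```

Because nodes return updates instead of mutating the state directly, the merge step is the single place where history is accumulated, which keeps each node small and easy to test.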

Why LangGraph?

In traditional LangChain workflows, chaining steps linearly can become limiting when workflows require:

- Branching logic (e.g., “If user intent is A, go to branch X; if B, go to branch Y”),

- Nested workflows and recursive patterns,

- Resumable executions and fault tolerance.
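Resumability, the last requirement in the list above, comes down to persisting the state together with a pointer to the next step, so a failed run can continue from where it stopped. The following is a simplified sketch using a linear list of steps and a JSON string as the checkpoint. The step names, the `run` helper, and the checkpoint layout are assumptions made for illustration, not LangGraph's checkpoint format.

```python
import json
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Handler = Callable[[State], State]

STEPS: List[str] = ["draft", "review", "publish"]  # hypothetical workflow


def run(handlers: Dict[str, Handler], checkpoint: str) -> str:
    """Run the remaining steps; on failure, stop and return the last good checkpoint."""
    saved = json.loads(checkpoint)
    state, index = saved["state"], saved["next"]
    while index < len(STEPS):
        try:
            state = handlers[STEPS[index]](state)
        except RuntimeError:
            break  # state and index still describe the last completed step
        index += 1
    return json.dumps({"state": state, "next": index})


def draft(state: State) -> State:
    return {**state, "log": state["log"] + ["draft"]}


def review(state: State) -> State:
    return {**state, "log": state["log"] + ["review"]}


def review_failing(state: State) -> State:
    raise RuntimeError("reviewer service unavailable")


def publish(state: State) -> State:
    return {**state, "log": state["log"] + ["publish"]}


start = json.dumps({"state": {"log": []}, "next": 0})

# First run: 'review' fails, so the checkpoint points at it.
paused = run({"draft": draft, "review": review_failing, "publish": publish}, start)

# Second run: resume from the checkpoint; 'draft' is not repeated.
finished = run({"draft": draft, "review": review, "publish": publish}, paused)
print(json.loads(finished)["state"]["log"])
```

In a real graph the checkpoint would hold a node name rather than a list index, but the idea is the same: completed work is never redone after a failure.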

LangGraph addresses these needs by representing workflows as state transition systems, which is particularly useful for:

- Agentic AI implementations with feedback loops,

- Complex multi-turn dialogue management,

- Business process automation workflows with approvals and conditionals.

Example Use Cases:

- A Customer Service Agent that intelligently routes between different support flows based on issue type and resolution status.

- A Content Production Agent that drafts, reviews, edits, and schedules articles automatically, with branches for escalation if editorial standards aren’t met.

- A Research Assistant Agent that iteratively queries knowledge bases, refines searches, summarizes content, and compiles references — adapting its workflow based on intermediate findings.

LangGraph vs. LangChain:

| Feature | LangChain | LangGraph |
| --- | --- | --- |
| Workflow Structure | Linear chains of steps | Directed graph/state-machine architecture |
| Conditionals & Branching | Manual handling via Python logic | Native support with declarative API |
| Visualization | Limited | Workflow graph structure is naturally visual |
| Adaptability & Flexibility | Moderate | High; supports complex adaptive workflows |
| Ideal For | Simple LLM applications | Complex, multi-step, stateful workflows |

Benefits for Agentic AI Systems:

- Declarative workflow design: Developers can focus on defining state transitions rather than procedural code.

- Resilience: Easier error handling, retry strategies, and fallback paths.

- Reusability: Nodes and graphs can be reused as components across projects.

- Scalability: Well-suited for orchestrating multiple LLMs, APIs, databases, and tools in a coherent, traceable workflow.

In summary, LangGraph serves as an orchestration layer for sophisticated, autonomous AI agents. By letting developers model branching, looping, and stateful workflows explicitly, it helps them build systems that mirror real-world complexity and are more adaptable, explainable, and production-ready.