What is Vibe Coding?

Vibe Coding refers to the deliberate design and implementation of tone, personality, and emotional nuance in AI-generated outputs. Unlike traditional text generation that focuses solely on factual correctness or grammatical structure, Vibe Coding ensures that AI responses reflect a specific “vibe” — the emotional color and communication style best suited for the interaction and audience.

At its core, Vibe Coding allows AI systems to communicate not just with words, but with intentional tone and personality, making interactions feel more natural, human, and relatable.

Examples of Common “Vibes” in AI Communication:

- Friendly: Warm, approachable, casual language that builds rapport.

Example: “Hi there! Great question — happy to help you with that! 😊”

- Empathetic: Sensitive, understanding tone, ideal for customer support or emotionally charged contexts.

Example: “I’m really sorry to hear that happened. Let’s get this sorted together.”

- Professional: Polished, formal, and respectful language appropriate for business and corporate settings.

Example: “Thank you for your inquiry. I will review this and get back to you promptly.”

- Humorous: Light-hearted tone that uses humor or playfulness to engage.

Example: “Looks like your internet took a nap — let’s wake it up together! 🕹️”

- Formal/Informal Balance: Adjusting communication style based on audience demographics or cultural expectations.

Why Vibe Coding Matters in Customer-Facing AI

In customer interactions, tone is as important as content. A response that’s factually correct but feels cold or robotic can erode trust, reduce engagement, or fail to de-escalate frustration. By contrast, an AI that mirrors the right tone can build rapport, defuse tension, and enhance user satisfaction.

In industries like retail, healthcare, and financial services, adopting the right “vibe” improves brand consistency and provides a seamless customer experience across channels — from chatbots and virtual assistants to marketing copy and automated emails.

Comparison with Plain Text Generation

| Aspect | Plain Text Generation | Vibe Coded Generation |
| --- | --- | --- |
| Focus | Content correctness and factual response | Content + tone + emotional nuance |
| Style | Neutral, factual | Context-sensitive, expressive |
| Personalization | Minimal | High, tailored to audience or situation |
| Use case | Generic answers, documentation | Customer support, sales, marketing, digital UX |
| Example Response | “Your order will arrive tomorrow.” | “Great news! Your order is on its way and should arrive tomorrow. We hope you love it!” |

Plain text alone may suffice for knowledge retrieval tasks, but customer-facing interactions increasingly demand emotional intelligence from AI. Vibe Coding bridges this gap, ensuring AI systems do more than answer—they engage.

Techniques for Implementing Vibe Coding

Implementing Vibe Coding is not simply about instructing an AI to “sound friendly” — it requires thoughtful design, consistent execution, and often technical strategies to embed tone and personality into AI-generated outputs. Below are key techniques to make this possible:

Prompt Engineering for Tone and Style

The simplest and most widely used method:

- Carefully craft prompts that instruct the Large Language Model (LLM) to adopt a particular tone.

Example prompts:

- Friendly: “Answer the user’s question in a warm and approachable tone. Use emojis where appropriate.”

- Empathetic: “Respond with empathy, acknowledging the customer’s feelings and offering supportive language.”

- Professional: “Draft a formal and concise response suitable for a corporate environment.”

Tip: Explicitly including the tone or style descriptor directly in the prompt often yields good results without additional tooling.

Reusable Prompt Templates

To ensure consistency, organizations can maintain a library of reusable templates pre-configured for different vibes and scenarios.

Example:

Template: “Respond as a {tone} AI assistant. The conversation should feel {personality descriptor}. Provide clear, concise, and helpful answers.”

Benefits:

- Reduces prompt design effort.

- Enables a standardized tone across teams and applications.

- Easily integrated into API-driven workflows (e.g., LangChain, n8n).
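
A template library like this can be as small as a dictionary plus one formatting function. The sketch below uses Python's standard `string.Template` (so placeholders are written `$tone` rather than `{tone}`). The vibe names, descriptors, and the `build_system_prompt` helper are assumptions made for illustration and do not come from any framework.

```python
from string import Template

# Shared template text, mirroring the example template above.
VIBE_TEMPLATE = Template(
    "Respond as a $tone AI assistant. "
    "The conversation should feel $personality. "
    "Provide clear, concise, and helpful answers."
)

# Hypothetical vibe library: names and descriptors are illustrative only.
VIBES = {
    "friendly": {"tone": "friendly", "personality": "warm and approachable"},
    "empathetic": {"tone": "empathetic", "personality": "supportive and understanding"},
    "professional": {"tone": "professional", "personality": "formal and concise"},
}


def build_system_prompt(vibe: str) -> str:
    """Return the system prompt for a named vibe; unknown names raise KeyError."""
    return VIBE_TEMPLATE.substitute(VIBES[vibe])


print(build_system_prompt("empathetic"))
```

Keeping the wording in one template and the variation in data means a brand-voice change touches a single string rather than every prompt in the codebase.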

Dynamic Vibe Adaptation

Personalize the vibe dynamically based on:

- User profile data: New users vs. loyal customers.

- Sentiment detection: Adjust tone when frustration or negative sentiment is detected.

- Channel context: Informal tone for chat; formal tone for email.

This can be implemented using:

- Sentiment analysis APIs

- Conditional prompt logic

- User preference history (stored in memory/context)

Example logic:

If user sentiment == negative:

Use empathetic tone prompt.

Else:

Use friendly tone prompt.
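
The example logic above could be sketched in Python roughly as follows. It assumes an upstream sentiment classifier that emits one of `"negative"`, `"neutral"`, or `"positive"`; the `choose_tone` function and the channel names are hypothetical.

```python
def choose_tone(sentiment: str, channel: str = "chat") -> str:
    """Pick a tone label from detected sentiment and channel.

    `sentiment` is assumed to come from an upstream classifier.
    """
    if sentiment == "negative":
        return "empathetic"    # de-escalate first, regardless of channel
    if channel == "email":
        return "professional"  # formal tone for email
    return "friendly"          # informal default for chat


print(choose_tone("negative", "email"))
print(choose_tone("neutral", "email"))
print(choose_tone("positive"))
```

Putting the sentiment check first is a design choice: a frustrated user gets the empathetic prompt even on a channel that would otherwise default to a formal tone.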

Fine-Tuning LLMs on Stylistic Data

Beyond prompt engineering, organizations can fine-tune their LLMs on tone-specific datasets:

- Training examples showcasing preferred tone and language style.

- Brand voice guides encoded into the fine-tuning corpus.

Benefits:

- Reduces reliance on prompt instructions.

- Produces tone-consistent responses even with short prompts.
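
As a rough illustration of what a tone-specific dataset might look like, the sketch below writes brand-voice examples as JSONL using a chat-style record layout. The exact schema depends on the fine-tuning service you use, so treat the `messages` structure, the `BRAND_VOICE` line, and the sample pairs as assumptions to adapt.

```python
import json

# Hypothetical one-line summary of the brand voice guide.
BRAND_VOICE = "You are a warm, concise support assistant."

# Illustrative pairs of (customer message, on-brand reply).
examples = [
    ("Where is my order?", "Thanks for checking in! Your order is on its way."),
    ("My package arrived damaged.", "I'm really sorry about that. Let's get it replaced."),
]


def to_record(user_text: str, reply: str) -> dict:
    """Wrap one example as a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": BRAND_VOICE},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": reply},
        ]
    }


# JSONL: one JSON object per line.
jsonl = "\n".join(json.dumps(to_record(u, r)) for u, r in examples)
print(jsonl)
```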

Style Transfer Pipelines

Post-process AI output with additional models or rules that adjust:

- Word choice

- Sentence structure

- Politeness level

- Use of emoji, humor, or casual phrasing

Example:

- Pass LLM output through a secondary “style transformer” that softens tone or injects humor where needed.
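
A style-transfer step does not have to be a second model. The simplest version is a rule-based post-processor, sketched below. The rewrite rules and the `soften` function are hypothetical, and a production pipeline would likely need many more rules or a dedicated rewriting model.

```python
import re

# Hypothetical rewrite rules: blunt phrasing -> softer phrasing.
SOFTEN_RULES = [
    (re.compile(r"\bYou must\b"), "Please"),
    (re.compile(r"\bWe cannot\b"), "Unfortunately, we can't"),
]


def soften(text: str, add_emoji: bool = False) -> str:
    """Apply each politeness rule in order, then optionally append an emoji."""
    for pattern, replacement in SOFTEN_RULES:
        text = pattern.sub(replacement, text)
    if add_emoji:
        text += " 🙂"
    return text


print(soften("You must restart the router."))
print(soften("We cannot refund this order."))
```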

Contextual Tone Memory

Maintain per-user tone preferences across sessions:

- Example: If a user prefers formal tone, store this in context/memory.

- Ensure every future interaction respects this setting automatically.

Tooling Support: LangChain / n8n

Use orchestration frameworks that allow tone-aware workflows:

- LangChain: Define different chains or routes for different vibes.

- n8n: Conditional nodes that choose which vibe-coded prompt to use based on input context.

Best Practice Summary:

- Combine static tone templates + dynamic adaptation for rich personalization.

- Align vibe coding with brand voice guidelines for consistency.

- Test tone effectiveness with user feedback loops (A/B testing different vibes).

- Always ensure tone does not compromise clarity, accessibility, or appropriateness.

What is Context Engineering?

Context Engineering refers to the deliberate design and management of relevant information—context—that informs how AI systems interpret inputs, generate outputs, and execute tasks. It is the practice of ensuring AI workflows are aware of surrounding information so that their responses and actions feel coherent, relevant, and personalized.

In modern conversational AI and agentic systems, context goes beyond just handling a single query—it enables continuity, personalization, and adaptability across multiple interactions or steps in a workflow.

Without proper context, AI models risk behaving like isolated question-answering machines, lacking awareness of prior exchanges, user identity, or task state. Context Engineering bridges this gap, turning reactive systems into intelligent, stateful agents.

Core Concept

At its heart, Context Engineering answers:

“What should the AI know right now to behave intelligently in this situation?”

It involves:

- Capturing context from various sources.

- Storing it efficiently (short-term or long-term memory).

- Injecting it into LLM prompts or agent workflows at the right time.

Types of Context:

User History

- Definition: Information about prior interactions with a user.

- Examples:

- Past questions asked.

- Purchase history.

- Known preferences (e.g., preferred language, tone, or interests).

- Use case: Enables personalization—“Welcome back, John! Last time you asked about X…”

Session Metadata

- Definition: Information about the current interaction session.

- Examples:

- Session ID.

- Device being used (mobile, desktop).

- Current task state in a multi-step workflow.

- Use case: Provides continuity during a single session—“Let’s continue where you left off…”

Real-Time Environmental Cues

- Definition: Context from the environment or platform.

- Examples:

- Geolocation data.

- Current time zone.

- Device capabilities.

- Use case: Tailors responses based on environment—“It looks like it’s evening in your time zone; would you like a summary instead?”

Context as Memory for Conversational and Agentic Systems

In conversational and agentic AI, context is the functional equivalent of memory:

- In short-term memory, context ensures continuity during a chat or workflow session (e.g., knowing what “it” refers to in “When will it arrive?”).

- In long-term memory, context allows AI to retain and recall facts about users over time (e.g., remembering that a user prefers formal responses or has purchased a particular product before).

This memory enables agents to:

- Reduce repetition for users.

- Maintain consistent personalization.

- Reason over multi-step tasks intelligently.

Why Context Engineering is Essential

- Enhances user experience by making AI interactions feel human-like and coherent.

- Enables personalization and relevance without repeatedly asking users for information.

- Supports multi-step workflows and autonomous agents that must manage state, history, and evolving goals.

Context Engineering is not just a backend optimization—it’s a fundamental design discipline that transforms AI from static responders into adaptive, intelligent collaborators.

Techniques for Implementing Context Engineering

Session Context Management

- What it is: Maintain user-specific context during a session (e.g., active conversation) to ensure continuity.

- How to implement:

- Store session state using session identifiers (e.g., tokens, cookies).

- Use memory frameworks (e.g., LangChain’s memory classes or Redis for state storage).

- Track entities, topics, intents throughout a session.

Example: In a customer support chatbot, if a user asks about an order and then asks “Where is it?”, the bot should infer that “it” refers to the order from earlier in the session.
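
To make the "Where is it?" example concrete, here is a minimal in-memory sketch. It tracks the last known entity per session and substitutes it for a standalone "it". The entity list, the `SESSIONS` dictionary, and both helper functions are assumptions; a real system would use an entity recognizer and a shared store such as Redis.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional

KNOWN_ENTITIES = ("order", "invoice", "router")  # hypothetical domain entities


@dataclass
class SessionState:
    """Short-term state for one conversation."""
    turns: List[str] = field(default_factory=list)
    last_entity: Optional[str] = None


SESSIONS: Dict[str, SessionState] = {}  # keyed by session ID


def record_turn(session_id: str, text: str) -> SessionState:
    """Store the user turn and remember the most recent entity it mentions."""
    state = SESSIONS.setdefault(session_id, SessionState())
    state.turns.append(text)
    for entity in KNOWN_ENTITIES:
        if entity in text.lower():
            state.last_entity = entity
    return state


def resolve_pronoun(session_id: str, text: str) -> str:
    """Replace a standalone 'it' with the last entity seen in this session."""
    state = SESSIONS.get(session_id)
    if state is None or state.last_entity is None:
        return text  # nothing to resolve against
    return re.sub(r"\bit\b", f"the {state.last_entity}", text, flags=re.IGNORECASE)


record_turn("demo", "I have a question about my order")
print(resolve_pronoun("demo", "Where is it?"))
```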

Persistent User Profiles

- What it is: Store long-term information about users to personalize experiences over time.

- How to implement:

- Use databases (e.g., DynamoDB, MongoDB) to maintain attributes like preferences, purchase history.

- Retrieve/update profile data at the start/end of interactions.

- Ensure GDPR/CCPA compliance for data storage.

Example: An AI shopping assistant remembers a user’s shoe size and style preferences across sessions.
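
The load-at-start, save-at-end pattern can be sketched with Python's built-in `sqlite3` module standing in for DynamoDB or MongoDB. The table layout and function names below are assumptions for illustration, and consent and retention handling are deliberately left out.

```python
import json
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a profile store; ':memory:' keeps it in RAM for demos."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS profiles (user_id TEXT PRIMARY KEY, data TEXT NOT NULL)"
    )
    return conn


def save_profile(conn: sqlite3.Connection, user_id: str, profile: dict) -> None:
    """Insert or overwrite the profile for one user."""
    conn.execute(
        "INSERT OR REPLACE INTO profiles (user_id, data) VALUES (?, ?)",
        (user_id, json.dumps(profile)),
    )
    conn.commit()


def load_profile(conn: sqlite3.Connection, user_id: str) -> dict:
    """Return the stored profile, or an empty dict for unknown users."""
    row = conn.execute(
        "SELECT data FROM profiles WHERE user_id = ?", (user_id,)
    ).fetchone()
    return json.loads(row[0]) if row else {}


store = open_store()
save_profile(store, "u1", {"shoe_size": 42, "style": "trail running"})
print(load_profile(store, "u1"))
```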

Contextual Metadata Injection

- What it is: Enrich prompts or API requests with metadata like device type, location, time of day.

- How to implement:

- Detect metadata dynamically (e.g., user-agent for device type, IP geolocation).

- Inject this metadata into prompt templates or workflow variables.

Example: A travel assistant provides different suggestions depending on whether the user is on mobile (likely traveling) or desktop (likely planning).
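
A minimal sketch of metadata injection might look like this. The user-agent check is a crude substring heuristic rather than a full parser, and the function names and the `Context:` line format are assumptions.

```python
def detect_device(user_agent: str) -> str:
    """Very rough device guess based on common user-agent substrings."""
    mobile_markers = ("Mobi", "Android", "iPhone")
    return "mobile" if any(m in user_agent for m in mobile_markers) else "desktop"


def part_of_day(hour: int) -> str:
    """Map a local hour (0-23) to a coarse time-of-day label."""
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    return "evening"


def inject_metadata(base_prompt: str, user_agent: str, local_hour: int) -> str:
    """Append a compact context line to the prompt."""
    device = detect_device(user_agent)
    return (
        f"{base_prompt}\n\n"
        f"Context: device={device}; time_of_day={part_of_day(local_hour)}."
    )


print(inject_metadata("Suggest things to do nearby.", "Mozilla/5.0 (iPhone) Mobile/15E148", 20))
```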

Knowledge Graph Integration

- What it is: Use knowledge graphs to model relationships between entities and concepts relevant to the user or domain.

- How to implement:

- Query knowledge graphs (e.g., Neo4j, Amazon Neptune) during conversation to enrich answers.

- Cache graph nodes and edges to improve latency.

Example: For a healthcare agent, contextually relate symptoms, diagnoses, and treatments dynamically.

Context Windows for LLMs

- What it is: Manage what parts of previous conversation history are included in the current prompt.

- How to implement:

- Apply recency-based truncation: prioritize most recent exchanges.

- Use salience scoring to include only key facts (e.g., names, dates, preferences).

- Chunk and summarize long histories when token limits are a constraint.

Example: In ChatGPT-like assistants, a rolling window ensures recent relevant information is available without overwhelming the prompt.
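
Recency-based truncation with a few pinned facts can be sketched as below. Token counts are approximated by whitespace-separated words, which is only a stand-in for a real tokenizer, and `build_window` and its parameters are hypothetical names.

```python
from typing import List, Sequence


def estimate_tokens(text: str) -> int:
    """Crude token estimate: number of whitespace-separated words."""
    return len(text.split())


def build_window(history: List[str], budget: int, pinned: Sequence[str] = ()) -> List[str]:
    """Keep pinned facts plus the most recent turns that fit within the budget."""
    used = sum(estimate_tokens(p) for p in pinned)
    kept: List[str] = []
    for turn in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(turn)
        if used + cost > budget:
            break                   # older turns are dropped
        kept.append(turn)
        used += cost
    return list(pinned) + kept[::-1]  # restore chronological order


history = ["a b c", "d e", "f g h i"]
print(build_window(history, budget=6))
print(build_window(history, budget=6, pinned=["name: Ana"]))
```

Pinned entries play the role of the salient facts (names, dates, preferences) that should survive even when older turns are dropped.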

Contextual Intent Switching Handling

- What it is: Detect when user intent has changed and appropriately reset or adjust context.

- How to implement:

- Train intent classifiers (e.g., with spaCy, Rasa) to identify topic switches.

- Automatically clear or suspend context if a different intent is detected.

Example:

A user inquiring about banking balances suddenly asks about ATM locations — the assistant correctly recognizes the change.
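
The banking example can be sketched with a keyword matcher standing in for a trained intent classifier. The intent names, keywords, and `Dialogue` class are assumptions. The point is only the reset behavior: slots collected for one intent are cleared when a different intent is detected.

```python
from typing import Dict, Optional

# Hypothetical keyword lists; a trained classifier would replace this lookup.
INTENT_KEYWORDS = {
    "check_balance": ("balance", "statement"),
    "find_atm": ("atm", "cash machine"),
}


def detect_intent(text: str) -> Optional[str]:
    """Return the first intent whose keyword appears in the text, else None."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(k in lowered for k in keywords):
            return intent
    return None


class Dialogue:
    """Tracks the active intent and the slots gathered for it."""

    def __init__(self) -> None:
        self.intent: Optional[str] = None
        self.slots: Dict[str, str] = {}

    def handle(self, text: str) -> Optional[str]:
        new_intent = detect_intent(text)
        if new_intent is not None and new_intent != self.intent:
            self.slots.clear()      # drop context tied to the previous intent
            self.intent = new_intent
        return self.intent


d = Dialogue()
print(d.handle("What's my balance?"))
d.slots["account"] = "savings"
print(d.handle("Where is the nearest ATM?"), d.slots)
```

A follow-up with no recognizable intent ("And last month?") keeps the current intent and its slots, which is what lets short follow-ups work.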

Multi-Channel Context Synchronization

- What it is: Maintain consistent context when a user interacts over different channels (e.g., web, mobile app, WhatsApp).

- How to implement:

- Use centralized context storage (e.g., Firebase Realtime Database or backend APIs).

- Ensure consistent session IDs or user identifiers across channels.

Example:

A user starts an inquiry on WhatsApp and completes it on the website without needing to repeat information.

Real-Time Environmental Context Awareness

- What it is: Adapt responses based on real-time environment signals (e.g., ambient noise, location, weather).

- How to implement:

- Integrate IoT sensor data, environment APIs (e.g., OpenWeatherMap for weather conditions).

- Pass environment variables as context to AI prompts/workflows.

Example:

A restaurant assistant offering outdoor dining suggestions takes current weather into account.

Contextual Memory APIs / Frameworks

- Use libraries and frameworks built for this purpose:

- LangChain memory classes: ConversationBufferMemory, ConversationSummaryMemory.

- Microsoft Semantic Kernel: Supports memory plugins for contextual interaction.

- Rasa Tracker Store: Manages dialogue state and history.

Summary of Best Practices:

- Choose short-term vs. long-term context storage consciously.

- Avoid carrying over context that is no longer relevant, since stale details can leak into unrelated responses.

- Ensure privacy, consent, and transparency when persisting user data.

- Monitor context-handling performance as it affects latency and quality of response.

- Treat context engineering as a first-class design concern for any conversational or agentic AI system.

Vibe Coding & Context Engineering in Practice

Combining tone and context for a rich user experience is at the heart of modern AI-driven interactions. While Vibe Coding ensures the AI speaks in a tone that resonates emotionally with the user (e.g., friendly, empathetic, professional), Context Engineering ensures the AI understands the situational background to respond meaningfully and relevantly. Together, they create an intelligent, adaptive, and human-like experience.

In practice, this combination goes beyond mere text generation — it shapes user trust, engagement, and satisfaction.

Real-World Use Cases

Customer Service Chatbots

Modern customer service bots increasingly rely on vibe coding and context engineering to provide seamless and satisfying support:

- Tone personalization: An empathetic tone when a customer is frustrated vs. a cheerful, casual tone when they are browsing or asking FAQs.

- Contextual relevance: Maintaining conversation history (e.g., past orders, issue details) ensures the bot understands returning users and avoids asking repetitive questions.

Example:

A telecom chatbot recognizes a returning user who previously reported a connectivity issue, greets them warmly, and proactively updates them on the status in a reassuring tone.

AI Sales Assistants

In sales interactions, tone and context can noticeably affect conversion rates:

- Vibe Coding: Adjusting style to match buyer persona — formal for enterprise customers, casual for younger shoppers.

- Context Engineering: Leveraging purchase history, preferences, and browsing behavior to recommend relevant products and make upsell suggestions.

Example:

An AI assistant for an e-commerce store notices that a customer often shops for outdoor gear and enthusiastically suggests complementary items, addressing them by name and using language that mirrors their style.

AI-Driven Coaching Tools

Digital coaching platforms (e.g., wellness, fitness, career guidance) increasingly require nuanced communication:

- Vibe Coding: Encouraging and motivational tone during progress check-ins, empathetic tone when users struggle.

- Context Engineering: Tracking user history, preferences, and goals to provide personalized advice.

Example:

A fitness coaching AI recognizes that a user has been inactive for a week and responds with an understanding, supportive tone:

“I know this week might have been tough — but let’s get back on track together! Here’s a gentle routine to restart.”

Key Benefits of Combining Vibe Coding & Context Engineering:

- Richer, more human-like conversations.

- Greater user satisfaction and engagement.

- Higher retention and loyalty due to personalized experiences.

Architectural Patterns for Vibe Coding & Context Engineering

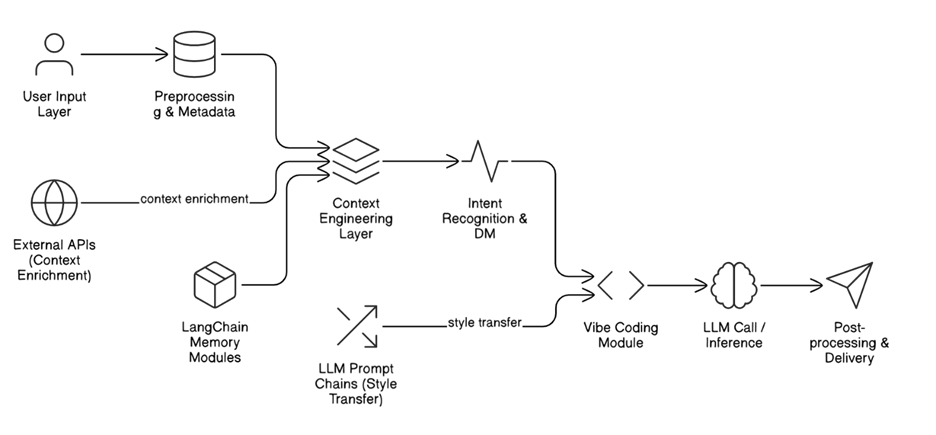

Implementing vibe coding and context engineering effectively requires thoughtful architectural patterns that integrate these capabilities into the broader AI workflow. This involves designing modular, scalable components that manage tone, context, memory, and enrichment — all while maintaining responsiveness and adaptability.

Where Vibe and Context Modules Fit into AI Workflow Architecture

In a typical AI-driven conversational or agentic system, the architecture can be visualized as layered components:

Input Layer

- Captures user input via text, speech, or multimodal channels.

Preprocessing Layer

- Normalizes input and extracts key metadata (e.g., user device, location, session ID).

Context Engineering Module

- Injects short-term and long-term context into the conversation workflow:

- Session history.

- User profiles.

- Environmental context.

Intent Recognition & Dialogue Management

- Determines user intent and orchestrates next actions.

Vibe Coding Module

- Selects the appropriate tone/style for the response:

- Friendly, empathetic, formal, humorous, etc.

- Uses templates or style-transfer mechanisms.

LLM Invocation / Prompt Assembly

- Builds the final prompt incorporating both context and vibe before passing it to the LLM.

Response Postprocessing & Output

- Final refinements and delivery to user.

Tools and Frameworks

LangChain Memory Modules

- Purpose: Manage short-term and long-term memory.

- Use case: Retain conversational history or user profile for contextual responses.

LLM Prompt Chains for Style Transfer

- Purpose: Structure prompts to apply different “vibes” or tones programmatically.

- Use case: Customize language style dynamically for different users or scenarios.

External APIs for Context Enrichment

- Purpose: Retrieve real-time environmental data (e.g., weather, location) or user attributes from CRM databases.

- Use case: Enrich context before prompt submission to improve relevance.

Example Diagram: Integration Points for Vibe & Context Modules

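The diagram follows the layers described above, in order: Input Layer → Preprocessing Layer → Context Engineering Module → Intent Recognition & Dialogue Management → Vibe Coding Module → LLM Invocation / Prompt Assembly → Response Postprocessing & Output.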

Key architectural insights:

- Loose coupling: Vibe Coding and Context Engineering should be modular, enabling reuse and easy replacement as tools evolve.

- Centralized context store: A unified memory system ensures consistency across different channels and sessions.

- Prompt orchestration: A clear separation between prompt construction (style + context) and inference makes workflows more maintainable.

Sample JSON structure for n8n representing an AI workflow that integrates Vibe Coding and Context Engineering concepts.

This workflow assumes:

- HTTP Request node for user input capture (e.g., from API/webhook).

- Set nodes for preprocessing and context enrichment.

- External API calls for context enrichment (e.g., user profile or weather).

- Vibe Coding implemented as a template step before sending the request to the LLM API (e.g., OpenAI node).

- Final post-processing before responding.

Sample n8n Workflow JSON

Explanation:

- Webhook Trigger: Starts workflow on incoming user message.

- Preprocessing: Captures metadata (e.g., device type).

- Fetch User Profile: Context enrichment from external API.

- Vibe Coding Template: Builds a prompt that includes user preferences and desired tone.

- LLM Inference: Calls OpenAI API to generate response.

- Respond to User: Sends the LLM’s response back to the user.
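
For readers who think more easily in code than in workflow nodes, the six steps above can be mirrored in plain Python. This is not the n8n workflow itself, only an analogue of its data flow. Every function name is hypothetical, the profile lookup is a hard-coded stub, and the LLM call is passed in as a callable so the sketch runs without network access.

```python
from typing import Callable, Dict


def preprocess(request: Dict) -> Dict:
    """Preprocessing: normalize the message and capture device metadata."""
    user_agent = request.get("user_agent", "")
    return {
        "user_id": request["user_id"],
        "message": request["message"].strip(),
        "device": "mobile" if "Mobi" in user_agent else "desktop",
    }


def fetch_user_profile(user_id: str) -> Dict:
    """Stand-in for the external profile API call."""
    profiles = {"u1": {"name": "Ana", "tone": "friendly"}}
    return profiles.get(user_id, {})


def build_prompt(event: Dict, profile: Dict) -> str:
    """Vibe Coding template: combine tone, user context, and the message."""
    tone = profile.get("tone", "professional")
    name = profile.get("name", "the customer")
    return (
        f"Respond in a {tone} tone to {name}, who is on a {event['device']} device.\n"
        f"User message: {event['message']}"
    )


def handle_request(request: Dict, llm: Callable[[str], str]) -> Dict:
    """Webhook entry point: run each step in order and return the reply."""
    event = preprocess(request)
    profile = fetch_user_profile(event["user_id"])
    prompt = build_prompt(event, profile)
    return {"reply": llm(prompt).strip()}


def fake_llm(prompt: str) -> str:
    """Echo stub used in place of a real model call."""
    return "OK: " + prompt.splitlines()[0]


print(handle_request({"user_id": "u1", "message": " Where is my order? "}, fake_llm))
```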

Best Practices for Vibe Coding & Context Engineering

Designing AI systems that successfully combine tone and context requires careful attention to consistency, user trust, ethical considerations, and graceful degradation when context is imperfect. Below are key best practices to guide implementation.

Tone Consistency Across Different Interaction Channels

- Why it matters:

Users increasingly interact with AI assistants across multiple touchpoints (e.g., website, mobile app, WhatsApp, email). Inconsistent tone or style across channels can undermine trust and reduce the perception of a unified brand experience.

- How to achieve it:

- Define a “tone-of-voice playbook” that formalizes language, tone, and style guidelines.

- Use centralized templates or style-transfer prompt libraries to ensure tone alignment.

- Test responses across different channels to detect tone drift and optimize phrasing for channel characteristics (e.g., brevity for SMS vs. expressiveness for web chat).

Handling Context Securely (Privacy, GDPR, etc.)

- Why it matters:

Context Engineering involves storing and processing potentially sensitive user data (e.g., preferences, location, behavioral history). Mishandling this data can lead to privacy violations and regulatory penalties.

- How to achieve it:

- Implement data minimization: Only collect and store context strictly necessary for the service.

- Apply strong encryption for data in transit and at rest.

- Ensure compliance with regional regulations (e.g., GDPR, CCPA):

- Provide transparency about what context data is captured.

- Offer users mechanisms to view, correct, or delete their data.

- Use pseudonymization or anonymization where possible for long-term profiling.
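
Two of these points, data minimization and pseudonymization, are easy to sketch with the standard library. The example below uses a keyed hash (HMAC-SHA256) so the same user always maps to the same pseudonym without storing the raw identifier. The field names and the hard-coded key are placeholders, and a real deployment would load the key from a secrets manager. Note that pseudonymized data is generally still treated as personal data, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac
from typing import Dict, Iterable


def pseudonymize(user_id: str, secret: bytes) -> str:
    """Derive a stable pseudonym from a user ID with a keyed hash."""
    return hmac.new(secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(profile: Dict, allowed: Iterable[str]) -> Dict:
    """Keep only the fields the service actually needs."""
    allowed_set = set(allowed)
    return {k: v for k, v in profile.items() if k in allowed_set}


SECRET = b"replace-with-a-managed-secret"  # placeholder key for the demo only
raw = {"email": "ana@example.com", "tone": "formal", "birthdate": "1990-01-01"}

record = {
    "user": pseudonymize(raw["email"], SECRET),
    **minimize(raw, allowed=("tone",)),
}
print(record)
```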

Fall-Back Strategies When Context Is Incomplete

- Why it matters:

Context Engineering systems inevitably encounter scenarios where user history, metadata, or preferences are missing or ambiguous. Without graceful fallback mechanisms, this can degrade the user experience.

- How to achieve it:

- Design conversational fallbacks that politely clarify context (“Could you tell me a bit more so I can assist?”).

- Default to safe, generic responses that are still helpful even when personalization is unavailable.

- Use historical population-level defaults or heuristics to approximate context when user-specific data is lacking.

- Avoid making incorrect assumptions based on sparse context — it’s better to ask explicitly.
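
One way to express these fallbacks in code is to merge whatever context exists over safe defaults and ask a clarifying question when a required field is still missing. The required field (`order_id`), the defaults, and the function names below are assumptions for illustration.

```python
from typing import Dict, List, Optional, Tuple

DEFAULTS = {"tone": "professional"}  # safe, generic defaults
REQUIRED = ("order_id",)             # fields we must not guess


def build_context(profile: Optional[Dict]) -> Tuple[Dict, List[str]]:
    """Overlay known values on defaults and list required fields still missing."""
    known = {k: v for k, v in (profile or {}).items() if v is not None}
    context = {**DEFAULTS, **known}
    missing = [k for k in REQUIRED if k not in context]
    return context, missing


def next_action(profile: Optional[Dict]) -> str:
    """Ask for clarification instead of guessing when required context is absent."""
    context, missing = build_context(profile)
    if missing:
        return (
            "Could you tell me a bit more so I can assist? "
            "I still need: " + ", ".join(missing) + "."
        )
    return f"Proceeding with order {context['order_id']} in a {context['tone']} tone."


print(next_action(None))
print(next_action({"order_id": "A1"}))
```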

Personalization Without Overstepping Boundaries

- Why it matters:

Hyper-personalization can cross into invasive territory, making users uncomfortable and eroding trust if AI assistants appear “too familiar” or reference unexpected data.

- How to achieve it:

- Ensure contextual relevance: Personalization should clearly serve the user’s immediate need, not simply demonstrate how much the system “knows”.

- Avoid referencing sensitive attributes unless explicitly required and consented to.

- Provide users with clear explanations and controls over personalization features (“You can manage your preferences anytime…”).

- Respect user intent and preferences dynamically — for example, switch to a neutral tone if a user indicates discomfort.

Successful vibe coding and context engineering is not just a technical exercise — it’s a trust-building practice. By ensuring consistent tone, secure and ethical handling of context, and graceful handling of uncertainty, we can create user experiences that feel human, respectful, and reliable.

Challenges and Trade-offs

While Vibe Coding and Context Engineering unlock rich, human-like interactions, they also introduce significant challenges that architects and designers must carefully navigate. Addressing these challenges requires balancing personalization, system performance, tone consistency, and brand alignment — without compromising user trust or experience.

Risks of “Over-Personalization”

- Challenge:

Personalization enhances relevance, but excessive or intrusive personalization can feel invasive and even creepy. For example, referencing user details that were not expected (or perceived as private) may erode trust.

- Trade-off:

The goal is to maximize contextual relevance while maintaining transparency and respecting user comfort zones.

- Mitigation:

- Establish clear boundaries for what types of context data can be used in different interaction scenarios.

- Allow users to control personalization levels (“minimal personalization” mode).

- Prioritize contextual cues that are openly shared rather than inferred or opaque.

Performance vs. Memory Size Trade-offs

- Challenge:

Context Engineering often involves maintaining memory across sessions, users, and channels. Storing and retrieving large context histories can degrade performance, increase latency, and impact scalability.

- Trade-off:

Deeper context improves personalization quality but requires careful memory management to avoid performance bottlenecks.

- Mitigation:

- Apply context windowing (e.g., recent history prioritization).

- Summarize or distill long histories instead of replaying full transcripts.

- Use hybrid storage strategies (e.g., in-memory cache for active sessions + database for long-term context).

Tone Mismatch Risks in Multi-lingual Settings

- Challenge:

Vibe Coding depends heavily on linguistic nuance and cultural sensitivity. A tone that feels “friendly” in one language may feel informal or disrespectful in another.

- Trade-off:

Striving for tone consistency globally may result in unintended misinterpretation or offense in different cultural contexts.

- Mitigation:

- Localize not just content but also tone-of-voice for each language and culture.

- Engage native speakers and cultural consultants to review tone models.

- Build language-aware tone templates that adapt vibe coding rules per locale.
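
Language-aware tone rules can be stored per locale with a fallback chain from the full locale to the base language to a default. The values in the sketch below are placeholders chosen to show the lookup, not cultural guidance. In practice they would come from the native-speaker review mentioned above.

```python
# Placeholder rules: real values should come from localization review.
TONE_RULES = {
    "default": {"register": "neutral", "emoji": False},
    "en": {"register": "casual", "emoji": True},
    "de": {"register": "formal", "emoji": False},
}


def tone_rules(locale: str) -> dict:
    """Look up rules by full locale, then base language, then the default."""
    language = locale.split("-")[0]
    for key in (locale, language, "default"):
        if key in TONE_RULES:
            return TONE_RULES[key]
    raise KeyError("TONE_RULES must define a 'default' entry")


print(tone_rules("de-AT"))
print(tone_rules("fr-FR"))
```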

Guardrails for Respectful, Brand-Aligned Communication

- Challenge:

As AI becomes responsible for more customer-facing communication, maintaining brand voice, respectfulness, and appropriateness is essential. Automated systems risk deviating from these standards without proper safeguards.

- Trade-off:

Guardrails may limit creativity or spontaneity but are essential to protect brand reputation and user trust.

- Mitigation:

- Implement prompt engineering guidelines that encode brand tone principles explicitly.

- Use moderation and filter layers to catch inappropriate or off-brand responses before delivery.

- Continuously audit and fine-tune tone templates and vibe coding configurations.
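
A filter layer can start as a simple pre-delivery check that swaps in a safe fallback when a draft violates a rule. The banned phrases, the all-caps rule, and the fallback text below are hypothetical. A production system would typically combine rules like these with a moderation model.

```python
from typing import List

BANNED_PHRASES = ("calm down", "as i already said")  # hypothetical off-brand phrases
FALLBACK = "Thank you for your patience. Let me look into this for you."


def violations(text: str) -> List[str]:
    """Return the list of guardrail rules the draft response breaks."""
    lowered = text.lower()
    found = [f"banned phrase: {p}" for p in BANNED_PHRASES if p in lowered]
    if text.isupper():
        found.append("all caps")  # reads as shouting
    return found


def deliver(text: str) -> str:
    """Send the draft if it is clean, otherwise send the safe fallback."""
    return FALLBACK if violations(text) else text


print(deliver("Calm down, it's fine."))
print(deliver("Happy to help!"))
```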

Vibe Coding and Context Engineering amplify both the potential and the risks of AI-powered interactions. Understanding these challenges and proactively designing trade-offs ensures that AI systems remain effective, performant, culturally sensitive, and trustworthy — all while staying true to the brand’s identity.

Case Studies: Vibe Coding & Context Engineering in Action

Real-world applications of vibe coding and context engineering demonstrate their power in delivering personalized, human-like, and adaptive AI experiences. Below are three short vignettes highlighting how these principles translate into impactful use cases.

E-commerce Chatbot with Adaptive Tone

Scenario:

An online fashion retailer deploys an AI chatbot on its website and mobile app to guide customers from product discovery to checkout and post-purchase support.

How vibe coding and context engineering work together:

- The chatbot adjusts its tone dynamically based on the customer’s journey stage:

- Cheerful and casual when browsing.

- More serious and informative when handling complaints or returns.

- Excited and enthusiastic when guiding checkout.

- Context engineering ensures continuity: remembering the customer’s name, last viewed products, and active promotions relevant to them.

Key features:

- Vibe Coding:

- Casual, playful tone for product browsing.

- Professional, clear tone for payments.

- Empathetic tone for complaints or returns.

- Context Engineering:

- Remembers user name, preferences, previous browsing history.

- Dynamically injects contextual promotions.

Example prompt template:

“You are an assistant for a fashion brand. Speak casually and warmly when helping a customer browse, but be formal and precise during payment guidance. Current user preferences: [color], [size], [last-viewed item]. Current user name: [name].”

AI Tutor with Progressive Context Memory

Scenario:

A personalized AI tutor helps students improve math skills by adapting lesson plans and tone based on progress and sentiment.

How vibe coding and context engineering work together:

- The tutor maintains a progressive memory of each student’s learning history:

- Tracks topics completed, areas of difficulty, and preferred learning styles.

- Tone adapts based on performance:

- Encouraging and upbeat when students struggle.

- Motivational and celebratory when students succeed.

- Context engineering retrieves past exercises and integrates them into feedback, ensuring the experience feels tailored and responsive.

Key features:

- Vibe Coding:

- Encouraging, motivational tone when students struggle.

- Celebratory tone when students achieve milestones.

- Context Engineering:

- Maintains long-term progress records.

- Retrieves history of exercises and common mistakes for targeted feedback.

Example prompt template:

“You are a supportive tutor. Encourage the student when they express doubt. Reference previous lessons on [topic] from [date]. Current student name: [name]. Current difficulty: [difficulty score].”

Helpdesk Agent with Sentiment-Aware Tone-Shifting

Scenario:

A telecom company implements a virtual agent to handle Level 1 support before escalation to human agents.

How vibe coding and context engineering work together:

- Sentiment analysis tools detect emotional cues in customer input (e.g., frustration, confusion).

- The AI agent shifts tone accordingly:

- Calm and empathetic when frustration is detected.

- Friendly and casual for general queries.

- Context engineering ensures the agent knows the customer’s service history and recent complaints, so customers don’t have to repeat themselves.

Key features:

- Vibe Coding:

- Empathetic tone if sentiment = frustration.

- Efficient, professional tone for billing issues.

- Context Engineering:

- Fetches account history, recent complaints, service outages.

- Avoids repetitive questions by remembering current case status.

Example prompt template:

“You are a telecom support agent. If sentiment is negative, adopt an empathetic, calming tone. If sentiment is neutral, maintain a helpful and professional tone. Reference account issues from CRM: [account_id], [last_ticket], [open_orders].”

As AI rapidly advances, the future points clearly toward emotionally intelligent, context-aware systems that don’t just respond with accurate information but communicate in ways that feel natural, empathetic, and human-like. Users expect interactions with AI to be seamless, personalized, and respectful — and achieving this requires more than technical precision; it demands emotional sensitivity and situational awareness.

The Future of Emotionally Intelligent, Context-Aware AI

Next-generation AI systems will:

- Recognize not just what a user says but how they feel when saying it.

- Maintain long-term relationships with users, remembering preferences, history, and patterns.

- Shift tone and style gracefully across cultures, languages, and communication contexts.

- Operate across channels with consistency while adapting to unique channel characteristics (e.g., brevity for SMS, conversational flow for voice).

Vibe Coding and Context Engineering will become fundamental capabilities — not optional features — in delivering this elevated experience.

Role of Vibe Coding & Context Engineering in Agentic AI Evolution

Agentic AI, defined by autonomous agents that can act intelligently and independently on behalf of users, depends critically on:

- Vibe Coding: Crafting emotionally appropriate, brand-aligned communications that build trust and engagement.

- Context Engineering: Managing user history, preferences, goals, and environmental data to inform decision-making and actions.

In this way, Vibe Coding and Context Engineering empower agents to go beyond “transactional bots” toward becoming true digital companions, assistants, and collaborators — able to anticipate needs, maintain rapport, and respond appropriately at every turn.

Tips for Developers, Designers, and Architects

For developers:

- Build workflows that separate tone management and context enrichment as modular, testable components.

- Implement fallback strategies to handle incomplete context gracefully.

- Prioritize performance by designing efficient context storage and retrieval mechanisms.

For designers:

- Define clear tone-of-voice guidelines aligned to brand values and user expectations.

- Prototype and user-test tone variations to avoid unintended emotional impact.

- Design interfaces that allow transparency and user control over personalization.

For architects:

- Architect systems for scalability — enabling fast context lookup, secure storage, and smooth integration with LLM APIs.

- Enforce privacy-by-design principles, especially for context memory and personalization features.

- Ensure tone and context modules are adaptable for multi-lingual, multi-cultural scenarios.

Final thought:

The path forward is clear: human-centered AI will be defined not just by what it knows, but by how it communicates and adapts. Vibe Coding and Context Engineering together form the foundation for trustworthy, relatable, and intelligent AI agents that can truly serve and delight users — globally and empathetically.