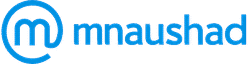

Large Language Models (LLMs) are the backbone of modern Generative AI systems. They enable capabilities such as conversational AI, text generation, summarization, code assistance, and much more.

As organizations adopt LLMs to build intelligent applications, one of the first strategic decisions they face is choosing between Open LLMs and Closed LLMs.

This section provides a clear definition of each category, highlights the key differences, and offers practical insights to help you select the right approach for your business or project.

What are Open LLMs?

Open LLMs are language models whose:

· Pre-trained model weights are publicly available
· Architectures and training methodologies are publicly documented, although training datasets are not always disclosed
· Licensing terms, ranging from permissive open-source licenses (e.g., Apache 2.0 for Mistral 7B) to custom community licenses with usage restrictions (e.g., Meta’s Llama licenses), allow users to:
  - Download and run models locally
  - Fine-tune models on private data
  - Deploy models within their own infrastructure
  - Audit, modify, or extend models for specific use cases

Examples of Popular Open LLMs:

- Meta’s Llama 2 & 3
- Mistral AI’s Mistral 7B & Mixtral
- Falcon LLM (TII)
- GPT-NeoX and GPT-J (EleutherAI)
- BLOOM (BigScience Project)

Open LLMs empower developers, researchers, and organizations to build custom AI solutions while maintaining control over infrastructure, privacy, and costs.
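Self-hosting an open model means provisioning enough GPU memory to hold its weights. A rough estimate is parameter count times bytes per parameter. The sketch below applies that rule of thumb, assuming weights only (activations, the KV cache, and framework overhead need additional memory); the precision table and the rounded 7-billion parameter count are illustrative assumptions.

```python
# Rough memory needed just to hold model weights, by numeric precision.
# Assumption: weights only; real deployments also need memory for
# activations, the KV cache, and framework overhead.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # A "7B" model such as Mistral 7B; 7e9 is a rounded parameter count.
    for precision in BYTES_PER_PARAM:
        print(f"7B @ {precision}: {weight_memory_gb(7e9, precision):.1f} GB")
```

By this estimate a 7B model needs about 14 GB for 16-bit weights but only about 3.5 GB at 4-bit quantization, which is one reason quantized open models are popular for local deployment.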

What are Closed LLMs?

Closed LLMs (also called proprietary LLMs) are language models developed by private organizations, where:

· Model weights are not publicly released
· Access is provided through controlled channels, typically via APIs
· The internal architecture or training data details are often partially or fully restricted
· Licensing governs how outputs or integrations can be used

Examples of Popular Closed LLMs:

- GPT-4 & GPT-4o (OpenAI)
- Claude (Anthropic)
- Gemini (Google DeepMind; Google’s Bard chatbot was renamed Gemini)
- Cohere’s Command R series

Closed LLMs often represent the cutting edge of AI capabilities but operate under strict platform, usage, and pricing policies.
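With a closed LLM, your application sends prompts to the vendor over its API. The sketch below only builds a request body in the shape used by OpenAI's Chat Completions API (a `model` name plus a list of role-tagged `messages`); it sends nothing over the network, and the model name and prompts are placeholders. It also makes the privacy trade-off concrete: the prompt text itself leaves your environment inside this body.

```python
import json

# Example model name; substitute whichever model your vendor offers.
MODEL_NAME = "gpt-4o"


def build_chat_request(user_prompt: str, model: str = MODEL_NAME) -> str:
    """Build a JSON request body in the shape of OpenAI's Chat Completions API.

    Only the payload is constructed here; nothing is sent over the network.
    The user's prompt text is embedded verbatim in the body sent to the vendor.
    """
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": user_prompt},
        ],
    }
    return json.dumps(body)


if __name__ == "__main__":
    print(build_chat_request("Summarize our Q3 sales notes."))
```

In a real integration this body would be POSTed to the vendor's endpoint (for OpenAI, `https://api.openai.com/v1/chat/completions`) with an API key in the `Authorization` header, usually through the vendor's official SDK rather than hand-built JSON.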

Key Differences: Open LLMs vs Closed LLMs

| Aspect | Open LLMs | Closed LLMs |
|---|---|---|
| Model Access | Downloadable, self-hosted | Access via API or limited platforms |
| Transparency | Open architecture and weights | Partial or no transparency |
| Customization | Full fine-tuning and domain adaptation allowed | Limited to prompt engineering or fine-tuning APIs (if provided) |
| Deployment Control | Run on your own infrastructure | Hosted by the vendor |
| Data Privacy | Keeps sensitive data within your environment | Data often transmitted to vendor’s servers |
| Cost Structure | Upfront infrastructure investment plus ongoing hosting costs | Pay-per-use (typically billed per token) |
| Innovation Pace | Rapid community-driven updates | Vendor-driven with periodic major releases |
| Compliance & … | Easier to … | Requires … |
| Performance | Varies by model; often catching up to top-tier closed models | Leading-edge capabilities, especially for reasoning and multi-modal tasks |

Choosing Between Open and Closed LLMs

The right choice depends on:

· Data Sensitivity: Open LLMs are ideal for environments where data privacy, sovereignty, or compliance is critical.
· Control Requirements: Organizations seeking full control over AI behavior, deployment, and customization often prefer Open LLMs.
· Performance Needs: For the latest, most advanced reasoning or multi-modal capabilities, Closed LLMs may offer better out-of-the-box results.
· Cost Considerations: Open LLMs can reduce long-term operational costs at sustained high usage but require initial infrastructure investment. Closed LLMs enable faster experimentation but carry ongoing pay-per-use costs.
· Speed to Market: Closed LLMs simplify rapid prototyping via APIs. Open LLMs are often preferred for deeply integrated, production-grade systems that need full control over the stack.
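The cost trade-off above can be made concrete with a simple break-even estimate. This sketch assumes a closed API billed per million tokens and a self-hosted open model with an upfront setup cost plus a fixed monthly hosting cost; every number in the usage example is a hypothetical placeholder, not real vendor pricing.

```python
import math


def monthly_api_cost(tokens_per_month: float, price_per_million: float) -> float:
    """Pay-per-use cost of a closed LLM API billed per million tokens."""
    return tokens_per_month / 1_000_000 * price_per_million


def break_even_months(
    tokens_per_month: float,
    price_per_million: float,
    upfront_cost: float,
    self_hosted_monthly: float,
) -> float:
    """Months until cumulative self-hosting cost equals cumulative API cost.

    Returns math.inf when self-hosting saves nothing per month, because the
    upfront investment is then never recovered.
    """
    monthly_savings = (
        monthly_api_cost(tokens_per_month, price_per_million) - self_hosted_monthly
    )
    if monthly_savings <= 0:
        return math.inf
    return upfront_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical workload and prices, for illustration only.
    months = break_even_months(
        tokens_per_month=2_000_000_000,  # 2B tokens per month
        price_per_million=5.0,           # $5 per 1M tokens -> $10,000 per month
        upfront_cost=30_000.0,           # hardware, setup, engineering time
        self_hosted_monthly=4_000.0,     # power, hosting, maintenance
    )
    print(f"Break-even after {months:.1f} months")
```

With these placeholder figures the script prints `Break-even after 5.0 months`; at low volumes the function returns infinity, matching the intuition that pay-per-use APIs win for light or experimental workloads. The model ignores utilization, scaling, and quality differences between models, which often matter as much as raw cost.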

Real-World Example Scenarios

| Scenario | Recommended LLM |
|---|---|
| Building a … | Open LLM, self-hosted on secure infrastructure |
| Rapidly prototyping … | Closed LLM |
| Creating a custom, … | Open LLM fine-tuned on proprietary data |
| Developing a … | Closed LLM |

Both Open and Closed LLMs play vital roles in the Generative AI ecosystem. By understanding their differences, organizations can make informed decisions aligned with their technical, security, and business objectives.